The Generative Edge Week 15

Generative video continues to blow our minds, language models may finally be set free, we segment anything, and Westworld meets The Sims.

Welcome to The Generative Edge for week 15. Generative media saw some exciting developments this week, along with more projects pushing for truly open and free AI. Let’s dive right in!

Generative video is getting here fast!

Just a couple of weeks ago we discussed the first tentative steps into generative video, followed just a week later by longer, more coherent generated videos.

Things have really started to shape up, and generative video has gone from a fun novelty meme machine to something much closer to being ready for prime time. Watch the following video: there are still plenty of weird AI artefacts, but it is so, so much better than what came before:

RunwayML is a research lab focused on multimodal creative tools.

They have just released Gen-2, their second-generation generative video model (paper here).

Runway’s approach adds depth information, along with a few other tricks, to substantially improve the generated video output.

From a janky, barely recognizable toy to something that is actually close to usable, all in a matter of a few weeks.

As we said before, expect this to really blow up in the near future: this technology will democratize video and filmmaking like nothing else and lead to an explosion of content.

A truly open and free LLM chatbot

Alternatives to GPT-3/4 have been popping up, but they are either burdened by license restrictions or can only be queried via an API (and don’t quite stack up to ChatGPT). Open Assistant could be the answer.

Open Assistant is a project meant to give everyone access to a great chat-based large language model.

The vision of the project is to make a large language model that can run on a single high-end consumer GPU.

The project aims to collect high-quality, human-generated instruction-fulfillment samples (the sort of dialog you have with ChatGPT) through a crowdsourced process.

You can already check out the model, as well as the gamified, crowdsourced data collection process, at open-assistant.io/dashboard.

Keep an eye on this project: it might be the first to give us a truly open, truly free LLM chatbot.

Generate images by segmenting anything

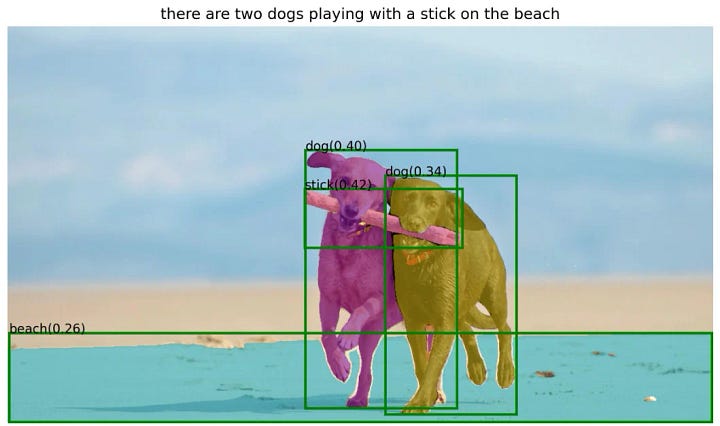

Last week Meta released Segment Anything, a system that can take any image and break it down into segments. For example, a picture of dogs running on the beach is split into four segments: two dogs, a stick and the beach.

The model is fully open source and incredibly robust. Being able to reliably identify the segments of an image is super useful for many generative image tasks, and naturally the generative AI community has already built on top of it:

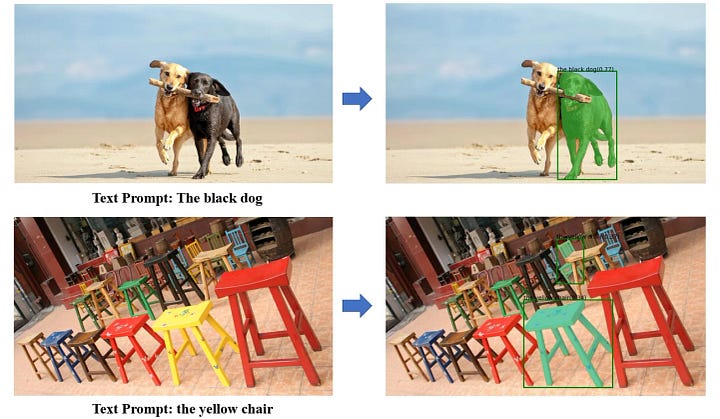

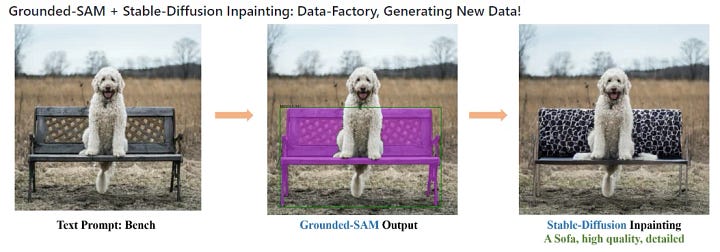

Grounded Segment Anything combines multiple models, including Segment Anything itself and Stable Diffusion.

It can reliably identify and segment objects in an image, then change them, remove them or add to them.

Change someone’s hair, clothing, furniture? It’s never been easier or more robust.

Expect this to find its way into creative pipelines almost immediately (much like ControlNet did).

… and what else?

Stanford/Google released a wild paper in which they simulate agents powered by a large language model (ChatGPT). Think The Sims meets Westworld: agents that live their lives, interact with one another and even plan parties. Check out this thread; it’s worth it.

LAION has launched a petition demanding the opposite of last week’s open letter: a firm push forward and a kind of CERN for AI.

And that’s it for this week!

Find all of our updates on our Substack at thegenerativeedge.substack.com, get in touch via our office hours if you want to talk professionally about Generative AI and visit our website at contiamo.com.

Have a wonderful week everyone!

Daniel

Generative AI engineer at Contiamo