The Generative Edge Week 25

GAIA dreams of endless roads, Stable Diffusion's successor is on its way and textbooks are all you need.

Welcome to week 25 of The Generative Edge. Here is the gist in 5 bullet points:

GAIA-1 is a generative AI that uses a "world model" to create highly realistic driving scenarios, improving training for autonomous vehicles and potentially helping them make better decisions.

Stability AI's upcoming SDXL model is an advanced development in the Stable Diffusion text-to-image suite, promising improved performance while still being small enough to run on local hardware.

Researchers have discovered that smaller AI models can perform exceptionally well when trained on high-quality data, such as textbooks, emphasizing the importance of data quality over quantity.

This approach can produce small, efficient expert models, such as the phi-1 code model, that excel at specific tasks and are easy to run locally.

Other AI advancements include Meta's Voicebox for voice synthesis and pth.ai, a problem-solving tool that offers an alternative to dialogue-based chat systems.

GAIA-1 dreams of traffic

GAIA-1 is a generative AI that has been trained on copious amounts of UK traffic video data. In the process, it has developed a “world model”, which means it has an understanding of what’s happening in traffic and why, how roads work, what a house is, what a car is, how it moves, how pedestrians behave, the effect of weather and speed on traffic and so on. The interesting bit: it has learned things about the world that were not explicitly part of the training data.

GAIA-1 generates highly realistic driving scenarios, enhancing the training of autonomous vehicles with synthetic data.

Because the AI has built an understanding of the world that grasps key driving concepts, it can predict and create believable future driving situations.

GAIA-1 can simulate driving scenarios outside its training data, making it useful for testing AI models in rare or risky conditions.

The model can be prompted like any other generative AI model: it can be told to speed up, take a left turn, drive in circles, produce specific scenes or modify existing ones.

This has implications for autonomous driving: by generating plausible future scenarios, a car could simulate what might happen next and make better decisions.

Having a “world model” is also considered an important cornerstone of any progress towards AGI.

Prompt: Driving zig-zag through the world

Prompt: Going around a stopped bus

Stable Diffusion SDXL

Stability AI, the company behind much of the momentum around Stable Diffusion, is about to release its successor, SDXL. In their own words, SDXL is “the most advanced development in the Stable Diffusion text-to-image suite of models.”

Stable Diffusion 1.5 has been hugely popular in the community, with powerful systems like ControlNet building on top of it.

SDXL is a foundation model with a larger parameter count than the original Stable Diffusion, yet it is still small enough to run on local hardware.

It remains to be seen whether this model (due for an open release in July) will be incorporated into existing workflows, whether fine-tuning is easy, and whether ControlNet can be adapted to it. We remain hopeful: an overall improved base model would be a welcome addition.

Textbooks are all you need

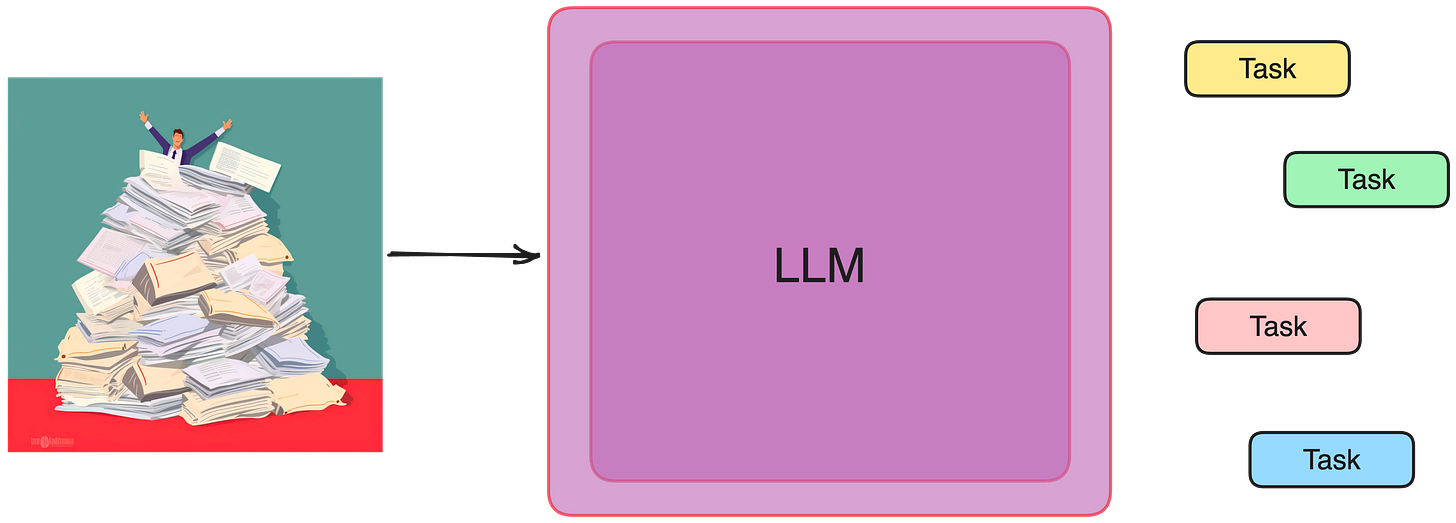

LLMs like GPT-4 are trained on MASSIVE amounts of text data: blogs, Reddit posts, textbooks, papers, etc. The resulting language models are very large and can do a lot of things. Operating these large models is a big task, and very expensive. How can we create small models that are very good at some things?

Smaller AI models can shine when trained on high-quality text (like textbooks), suggesting that data quality matters more than just having tons of data.

Researchers used ChatGPT to create even more textbook-like content, which they used to train a new model that is very small but surprisingly powerful.

An interesting thought: AI models becoming smarter by learning from AI-generated content might open up new ways to develop even better AI systems.

There is a lot of potential in smaller expert AI models trained on high-quality data (generated or not).
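A toy sketch of the "quality over quantity" idea, in Python: score each snippet in a corpus and keep only the ones that look textbook-like. The scoring rule (share of comment and docstring lines) and every name here (`Snippet`, `quality_score`, `filter_corpus`, the 0.3 threshold) are hypothetical illustrations, not the filtering method used for phi-1.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str  # where the snippet came from (illustrative label only)
    text: str    # the code itself


def quality_score(text: str) -> float:
    """Hypothetical heuristic: share of non-empty lines that explain the code
    (comment lines or lines starting with a docstring quote)."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines:
        return 0.0
    explanatory = sum(1 for ln in lines if ln.startswith("#") or ln.startswith('"""'))
    return explanatory / len(lines)


def filter_corpus(corpus: list[Snippet], threshold: float = 0.3) -> list[Snippet]:
    """Keep only snippets whose score reaches the (assumed) threshold."""
    return [s for s in corpus if quality_score(s.text) >= threshold]


corpus = [
    Snippet("forum", "x=1\ny=2\nprint(x+y)"),
    Snippet(
        "textbook",
        '# Add two numbers and return the sum.\ndef add(a, b):\n    """Return a + b."""\n    return a + b',
    ),
]

kept = filter_corpus(corpus)
print([s.source for s in kept])  # ['textbook']
```

A real pipeline would replace the heuristic with something far more robust (for example a trained classifier), but the shape is the same: discard most of the data and train on the small, clean remainder.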

This is how it works:

You use a LOT of text data when pre-training your large language model.

What happens if you only use very high-quality data, like textbooks on certain topics, to train a model?

Turns out, you get a very small model that is very good at some tasks.

We can even generate some textbooks with a large model like GPT-4 and use them to train our small model further.

We end up with something like phi-1, a code model that is quite good at what it does despite its tiny size of 1.3B parameters (for comparison, GPT-4 is rumored to be 8 × 220B).
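The size gap is easier to appreciate with the numbers worked out. A quick Python calculation, taking the GPT-4 rumor at face value (it is unconfirmed) and assuming 2 bytes per parameter (16-bit weights only, ignoring activations and other runtime overhead):

```python
PHI1_PARAMS = 1.3e9
GPT4_RUMORED_PARAMS = 8 * 220e9  # rumor, not confirmed by OpenAI
BYTES_PER_PARAM_FP16 = 2         # assumption: 16-bit weights


def weights_gb(params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Approximate size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


ratio = GPT4_RUMORED_PARAMS / PHI1_PARAMS

print(f"rumored GPT-4 total: {GPT4_RUMORED_PARAMS / 1e12:.2f}T parameters")  # 1.76T
print(f"size ratio: ~{ratio:,.0f}x")                                          # ~1,354x
print(f"phi-1 fp16 weights: ~{weights_gb(PHI1_PARAMS):.1f} GB")               # ~2.6 GB
print(f"rumored GPT-4 fp16 weights: ~{weights_gb(GPT4_RUMORED_PARAMS):,.0f} GB")  # ~3,520 GB
```

Under these assumptions phi-1's weights take up roughly 2.6 GB, which helps explain why models of this size are practical to run locally.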

… and what else?

Meta’s Voicebox is very good at synthesizing speech, and pth.ai is a cool problem-solving helper and an alternative to purely dialogue-based chat.

And that’s it for this week!

Find all of our updates on our Substack at thegenerativeedge.substack.com, get in touch via our office hours if you want to talk professionally about Generative AI, and visit our website at contiamo.com.

Have a wonderful week everyone!

Daniel

Generative AI engineer at Contiamo